Algorithmic PETs

Contents

Algorithmic PETs#

Algorithmic PETs rely on altercating of the representation of the data to secure privacy while retaining the information necessary for adequate utility. Four techniques will be touched upon; differential privacy, homomorphic encryption, zero-knowledge proofs and aggregation of the data.

Differential privacy (DP)#

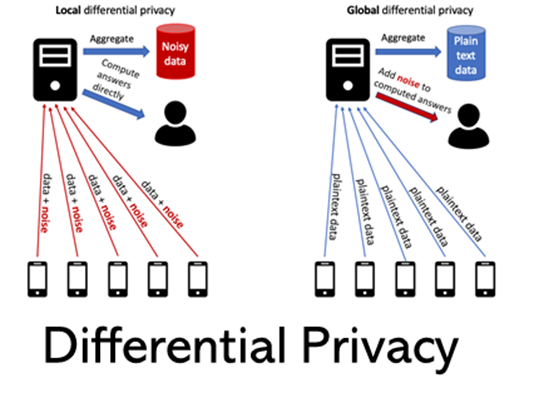

Differential privacy defines privacy as the inability for an adversary to tell whether an individual is part of the dataset or not, give complete knowledge of the individual’s data and all other records in the dataset. DP ensures this by obscuring the data in such a way that the inclusion or exclusion of one individual’s record makes no statistical difference one the output . This is achieved by adding noise which can be generated by transformation of the data (for example Laplacian or neighbor distance) or manipulation.

Fig. 8 Demonstrating two types of DP, where noise is added either locally or on the global level.#

The model for generating noise is known to the maintainer of the data and can therefore be included in subsequent analyses or machine learning applications. This allows for the ‘deciphering’ of the noisy data to maintain high utility. DP is thus a reversible PET. With the right key, one can convert back to the sensitive data. DP is a robust set of PET and measures to determine its success are well defined (epsilon-differential privacy).

Implementations

Implementations are widely available. Google has C++, Go and Java algorithms, Microsoft has open-sourced a Rust-based library with Python bindings called SmartNoise and there is the OpenDP community boasting an impressive array of free implementations and documentation for enterprise use of DP.

DP is not without serious drawbacks for the use in a health data ecosystem. The main problem lies in its inability to handle outliers in the data. These are often meaningful, both clinically and financially, in healthcare settings. Additionally, as with minimalization, linking a single subject over datasets is impossible without some compromise to privacy. Finally, it has trouble with time series data and cannot handle some of the state-of-the-art in machine learning models. This makes it a hard-to-implement PET for a well-functioning data ecosystem.

Homomorphic Encryption#

A second type of algorithmic PET is Homomorphic Encryption (HE). General encryption is a proven and successful method to increase the security and privacy protection of a dataset. However, when one needs to decrypt the data to analyze it, the data becomes sensitive once more. HE aims to solve this by enabling computational analysis of encrypted data. It can be typically used when one wants to outsource computational tasks to a third party where the data to be send it sensitive in nature. Under HE, the encrypted data is sent to a third party, which runs a model on the encrypted data. Once the model is finished, the encrypted results are sent back to the original data owner who can decrypt the results with the relevant private key.

There are two core features of HE. First feature consist of semantic security. An adversary will be unable to distinguish whether a piece of ciphertext is the encrypted version out of a collection of different plaintexts. The second feature is concerned with one-wayness. In the case of an electronic health record, an adversary cannot link a piece of ciphertext to the corresponding plaintext based solely on the public key of a patient held by a data owner (used to link a patient to their health record). HE has been demonstrated in health data research to have been a secure option to train deep learning models without having the model owner see the original data or the model parameters. MORE (Matrix Operation for Randomization or Encryption) is an full HE implementation (FHE) that allows a third party to train models on the sensitive data without compromising privacy or utility 12. The main downside of any full HE scheme is the overhead posed on the system. It requires vast amounts of memory and processing power to enable the training machine learning models. Alternatively, one could alleviate some of the stringent requirements to gain processing speed. However, this results in compromising the privacy of the data. Another downside is the network aspect. FHE is suited for a third party analyzing the data when time is not of the essence (outsourced analytics). However, when an ecosystem is the goal, insight of data and subsequent models need to be transferred over multiple parties. For example, three parties can have data they want to pool and analyze together, while protecting the sensitive nature of their data. FHE is less suited for this type interaction between several parties. Another branch PETs, called secure Multiparty computation (MPC), remedies this by enabling joint computation in an encrypted space, building on the FHE principles. More on MPC can be found under the [Model-to-data PETs](Model-to-Data PETs) chapter.

Implementations

For a Python library with HE implementations, both simple math operations and machine learning applications, see Pyfhel.

Zero-Knowledge Proofs#

A third method of algorithmic PETs is aimed at giving a legitimate actor access to data that it is allowed to use. These Zero-Knowledge Proofs (ZKP) are aimed at verifying the digital identity and data of an actor without explicitly sharing the data itself. According to Preuveneers & Joosen (2016)3 : “A ZKP enables the prover to make sure the verifier is certain some statements are correct, but the verifier does not learn anything except the validity of the statement.” An example would be the COVID-19 vaccine passport used in the EU. By scanning a QR-code, anybody could inquire if the person in front of them was indeed vaccinated, while obtaining a small portion of personal data to verify if the user is who they say they are (birthdate). ZKP are a relatively young branch of PETs but already demonstrates promising results for large scale communication between devices in health data ecosystems, such Internet of Things networks between medical sensors or sharing reports between several care providers (e.g.: hospitals and GPs). The main challenge in ZKP lies in its reliance on the data quality of the underlying data, which cannot be verified by other members in the ecosystem. Additionally, lack of machine learning applications make it limited to simple data exchanges, thus limiting its potential for SME ecosystems developing services employing any automated learning or other analytical operations on the data.

Implementations

To learn more on ZKP and how to implement them, read the excellent Stanford Code guide.

Aggregation of data#

The fourth method of algorithmic PET is aggregation of data. By providing summary statistics such as means, medians or correlations, meaningful properties of data can be extracted and shared while masking the unique values of the subjects making up the data. Aggregation of data is often used by public bodies and institutions such as Statistics Netherlands (CBS) to share data publicly. Aggregation, just as minimalization, are irreversible. Once the sensitive data has been removed, it cannot be retrieved again. As with the methods described under minimalization, utility of the data is greatly reduced by this way and therefore this PET is of limited use for a data ecosystem.

Resource

Additional information on aggregation of data can be found here which provides an overview on the methods of many national statistics offices around the globe.

- 1

Muhammed, K. J., & Gbolagade, K. A. (2019, December). Enhanced MORE algorithm for fully homomorphic encryption based on secret information moduli set. In 2019 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM) (pp. 469-473). IEEE.

- 2

Vizitiu, A., Niƫă, C. I., Puiu, A., Suciu, C., & Itu, L. M. (2020). Applying deep neural networks over homomorphic encrypted medical data. Computational and mathematical methods in medicine, 2020.

- 3

Preuveneers D, Joosen W. Privacy-enabled remote health monitoring applications for resource constrained wearable devices. Proc ACM Symp Appl Comput. (2016) 04:119–124. 10.1145/2851613.2851683